There are a lot of queries that are fine when you’re site is small, but take ages as soon as you start to collect some data. Therefore it’s very important to monitor query performance. We usually track at least the following things:

- total time spent on SQL queries

- total time spent on rendering a page

- queries that took more than a certain threshold (query and time)

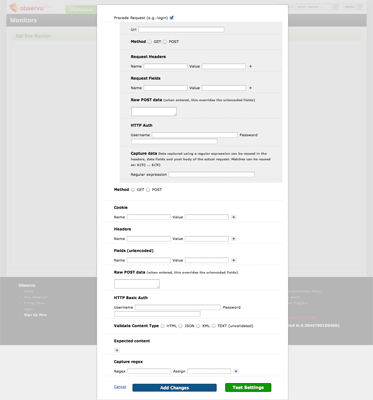

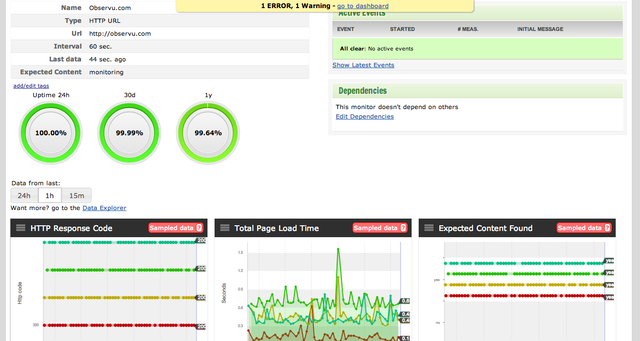

We log these, so we can quickly discover bottlenecks. (using the Observu server agent, we also store these in Observu for a quick overview and the ability to receive notifications when it happens)

Many frameworks such as Zend Framework have built in SQL profilers which can already do these things, you just need to check out the documentation.

After you found the culprits, it’s recommended to run them manually, prefixed with EXPLAIN. Often you will have forgotten to add an index or your index does not match the use of your query.

There are however some query patterns you can already watch out for when writing and reviewing your code. We’ve encountered these again and again as our databases grew larger:

SELECT ..... ORDER BY created_date DESC LIMIT 0,7 to get the most recent items

This becomes slow as the database grows larger even if there is an index on created_date. The way to counter this is to actually make use of that index by adding a condition that limits the amount of data involved, like: created_date >= ‘{date_7_days_ago}’

(it’s recommended to generate this date in code and round it to a date and a 00:00 time, so the result can be cached)

SELECT .......... LIMIT 500000,10 created by paging code on a large table

This one is harder to prevent, however there are some approaches:

- Do not sort the data, but have it returned in it’s natural order.

- Do not use LIMIT, but use actual conditions on the dimension which you order the results by. (e.g. a range of ID’s or dates)

- Just disallow browsing this deep into the data, will users really need this? Or is the ability just an oversight, which only gets triggered by search engines

SELECT ..... ORDER BY rand() LIMIT 10 to select random items

This is a very common way to select random items, that does not work at all as soon as you have more than a few thousand items. What happens is that MySQL will first have to generate a random number for each entry in the database, before being able to select the 10 to display.

The way around this is to first determine the range of ID’s to select from. ( SELECT MIN(id), MAX(id) FROM mytable )

Then generate a random id between MIN(id) and MAX(id)-1 and an upper bound, usually something like random_id+1000.

Finally, find a random item by querying SELECT * FROM mytable WHERE id>={random_id} AND id < {upper_bound} ORDER BY id ASC LIMIT 1.

This efficient way to retrieve a random item from a MySQL table can also be applied to multiple items. For really random, just repeat the procedure. However, in most cases, you don't need a really random set and you can just use something like:

SELECT * FROM mytable WHERE id>={random_id} AND id < {upper_bound} ORDER BY rand() LIMIT 10